We support many mainstream large language models (LLMs), but you need to purchase an API key for each model you want to use. Which model suits you best, and which is the most cost-effective? The following information is offered as a reference.

Before comparing prices, we first need to understand what a Token is. In Artificial Intelligence and Natural Language Processing, a Token is the basic unit of text produced by segmentation (tokenization). A Token does not correspond to a fixed number of words. In English, common short words such as "the" and "and" are one Token each, while a longer word such as "hesitation" may be a single Token or be split into several, depending on the model's tokenizer. As a rough estimate, one English Token corresponds to about 3–5 letters on average.
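To turn the 3–5 letters-per-Token rule of thumb into numbers, here is a minimal Python sketch. It is only a heuristic under stated assumptions: it counts non-whitespace characters and divides by 5 and by 3 to get a low and a high estimate. The function name `estimate_token_range` is hypothetical, and each model's real tokenizer will produce its own, different count.

```python
import math


def estimate_token_range(text: str) -> tuple[int, int]:
    """Return a rough (low, high) Token estimate for English text.

    Heuristic only (an assumption, not a real tokenizer): one Token is
    taken to cover about 3-5 non-whitespace characters.
    """
    # Whitespace is ignored here; real tokenizers usually attach spaces to words.
    chars = sum(1 for ch in text if not ch.isspace())
    low = math.ceil(chars / 5)   # if every Token covers ~5 characters
    high = math.ceil(chars / 3)  # if every Token covers ~3 characters
    return low, high


print(estimate_token_range("The hesitation was brief."))
```

For example, `estimate_token_range("The hesitation was brief.")` returns `(5, 8)`: the sentence has 22 non-whitespace characters, and 22/5 and 22/3 round up to 5 and 8.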

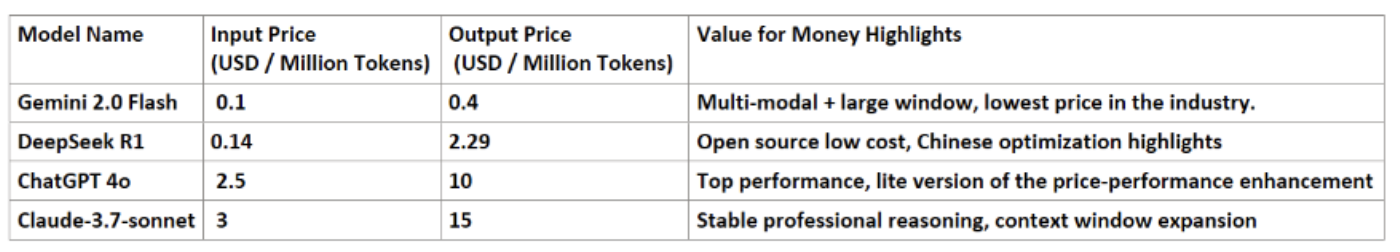

Below, we compare four popular AI models for your reference.

Gemini 2.0 Flash

Input $0.10/million Tokens, output $0.40/million Tokens (note: its lite version, Gemini 2.0 Flash-Lite, is priced as low as $0.075/million input Tokens and $0.30/million output Tokens).

Industry-leading multimodal processing and a context window of up to 1 million Tokens make it suitable for multimedia content generation, data analysis, and similar scenarios. Its price is only 1/25 of ChatGPT 4o's, for both input and output.
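The 1/25 figure can be checked directly from the list prices quoted in this article. A minimal sketch; the dictionary and function names are illustrative only:

```python
# List prices in USD per million Tokens, as quoted in this article.
GEMINI_2_0_FLASH = {"input": 0.10, "output": 0.40}
CHATGPT_4O = {"input": 2.50, "output": 10.00}


def price_ratio(expensive: dict[str, float], cheap: dict[str, float]) -> dict[str, float]:
    """Return how many times more `expensive` costs than `cheap`, per Token type."""
    return {kind: expensive[kind] / cheap[kind] for kind in ("input", "output")}


for kind, ratio in price_ratio(CHATGPT_4O, GEMINI_2_0_FLASH).items():
    print(f"{kind}: ChatGPT 4o costs {ratio:.0f}x as much as Gemini 2.0 Flash")
```

Both ratios come out to 25 (2.50 / 0.10 and 10 / 0.40), which is where the 1/25 figure comes from.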

The best overall price/performance ratio, especially for budget-sensitive users who need multimodal capabilities.

DeepSeek R1

Input $0.14/million Tokens (cache-hit price; $0.55 on a cache miss), output $2.19/million Tokens.

An open-source model whose training cost is reportedly only about 1/20 of GPT-4's. It offers high inference efficiency and suits tasks such as code generation and mathematical reasoning.

Cost-effective for Chinese-language scenarios, but its output price is still higher than Gemini's.

ChatGPT 4o

Input $2.50/million Tokens, output $10/million Tokens (note: its lite version, GPT-4o mini, costs as little as $0.15 for input and $0.60 for output per million Tokens).

Top-tier language understanding and reasoning, supporting applications across many domains.

The best price/performance among traditional high-end models, but its output price is significantly higher than Gemini's and DeepSeek's.

Claude 3.7 Sonnet

Input $3/million Tokens, output $15/million Tokens.

Stable performance in specialized areas (e.g., programming and complex reasoning), with a context window of 200K Tokens.

Suitable for professional scenarios, but less competitive on price; it is priced the same as its predecessor, Claude 3.5 Sonnet.

| Model | Input Price (USD/million Tokens) | Output Price (USD/million Tokens) | Value-for-Money Highlights |
|---|---|---|---|
| Gemini 2.0 Flash | 0.10 | 0.40 | Multimodal + large context window; among the lowest prices in the industry |
| DeepSeek R1 | 0.14 (cache hit) | 2.19 | Open source, low cost, optimized for Chinese |
| ChatGPT 4o | 2.50 | 10.00 | Top performance; the mini version offers better price/performance |
| Claude 3.7 Sonnet | 3.00 | 15.00 | Stable professional reasoning, 200K context window |
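Because every model charges separately for input and output Tokens, the cost of a workload is (input Tokens / 1,000,000) × input price + (output Tokens / 1,000,000) × output price. The sketch below applies that formula to the four models. The `ModelPrice` class, the `rank_by_cost` function, and the 10M-input / 2M-output workload are hypothetical illustrations; the prices are the per-million-Token list prices discussed here (DeepSeek R1 input at its cache-hit rate).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelPrice:
    name: str
    input_per_million: float   # USD per 1M input Tokens
    output_per_million: float  # USD per 1M output Tokens

    def cost(self, input_tokens: int, output_tokens: int) -> float:
        """USD cost: Tokens / 1,000,000 * price, summed over input and output."""
        return (input_tokens * self.input_per_million
                + output_tokens * self.output_per_million) / 1_000_000


# List prices compared in this article; update them if providers change prices.
MODELS = [
    ModelPrice("Gemini 2.0 Flash", 0.10, 0.40),
    ModelPrice("DeepSeek R1", 0.14, 2.19),  # input at the cache-hit rate
    ModelPrice("ChatGPT 4o", 2.50, 10.00),
    ModelPrice("Claude 3.7 Sonnet", 3.00, 15.00),
]


def rank_by_cost(input_tokens: int, output_tokens: int) -> list[tuple[str, float]]:
    """Return (model name, USD cost) pairs for one workload, cheapest first."""
    costs = [(m.name, m.cost(input_tokens, output_tokens)) for m in MODELS]
    return sorted(costs, key=lambda pair: pair[1])


if __name__ == "__main__":
    # Hypothetical monthly workload: 10M input Tokens, 2M output Tokens.
    for name, usd in rank_by_cost(10_000_000, 2_000_000):
        print(f"{name:<18} ${usd:,.2f}")
```

For this hypothetical workload, Gemini 2.0 Flash comes to $1.80, DeepSeek R1 to $5.78, ChatGPT 4o to $45.00, and Claude 3.7 Sonnet to $60.00. Since each model's output price is several times its input price, output-heavy workloads widen these gaps.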

Weighing price, performance, and fit for typical scenarios, Gemini 2.0 Flash currently offers the best price/performance ratio thanks to its multimodal capabilities and extremely low cost. To reduce costs further, consider its Lite version or the open-source DeepSeek R1 ecosystem.