PapersGPT is an AI chat plugin for Zotero, powered by many state-of-the-art (SOTA) LLMs, for reading and understanding papers, especially in serious study and work scenarios.

Download here for Zotero 7.

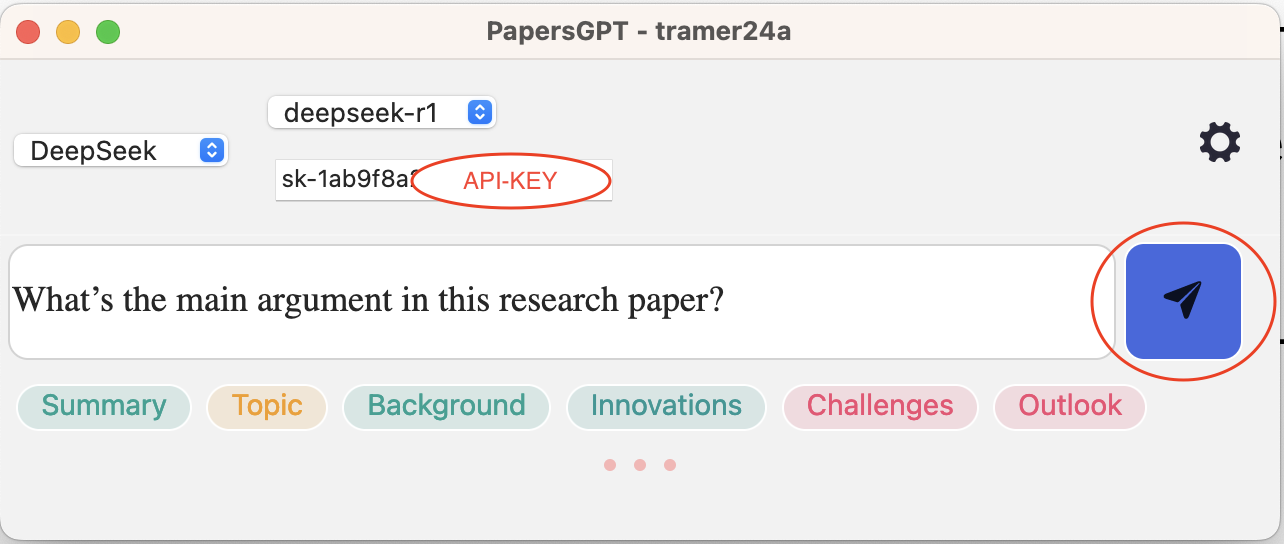

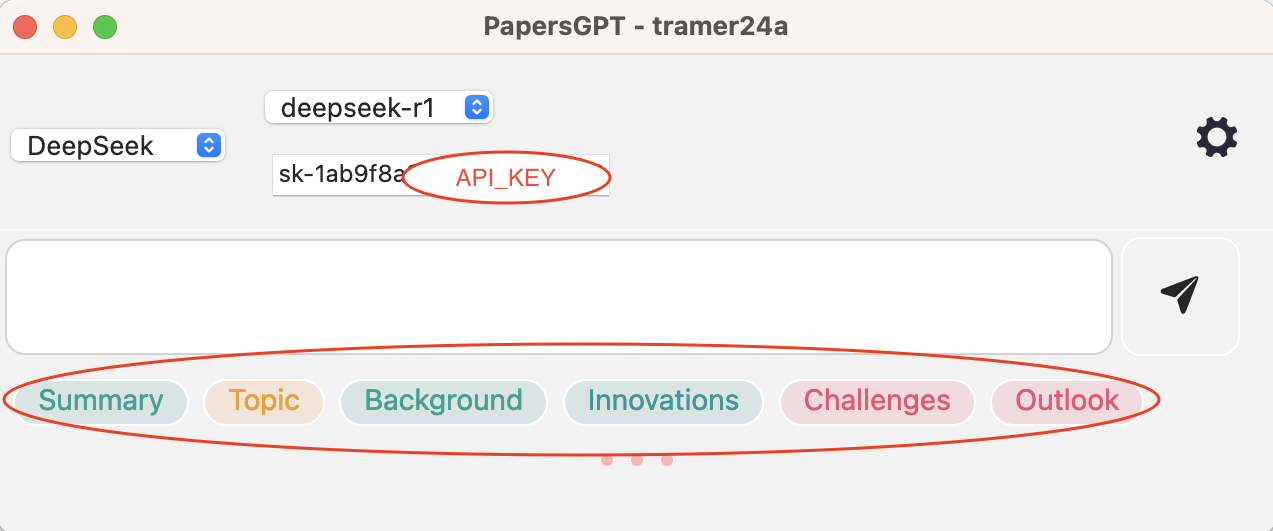

When using GPT, DeepSeek, Claude or Gemini models, remember to set your own API key. For example, to use gpt-4-turbo, gpt-4o or other GPT-series models, set your own OpenAI key; the same applies to DeepSeek, Claude 3 and Gemini. The GPT-4, DeepSeek, Claude 3 and Gemini model series are all supported.

For a DeepSeek API key, apply on the DeepSeek API platform: https://api-docs.deepseek.com

For OpenAI, Anthropic and Gemini API keys, apply on the API platform of the corresponding provider.

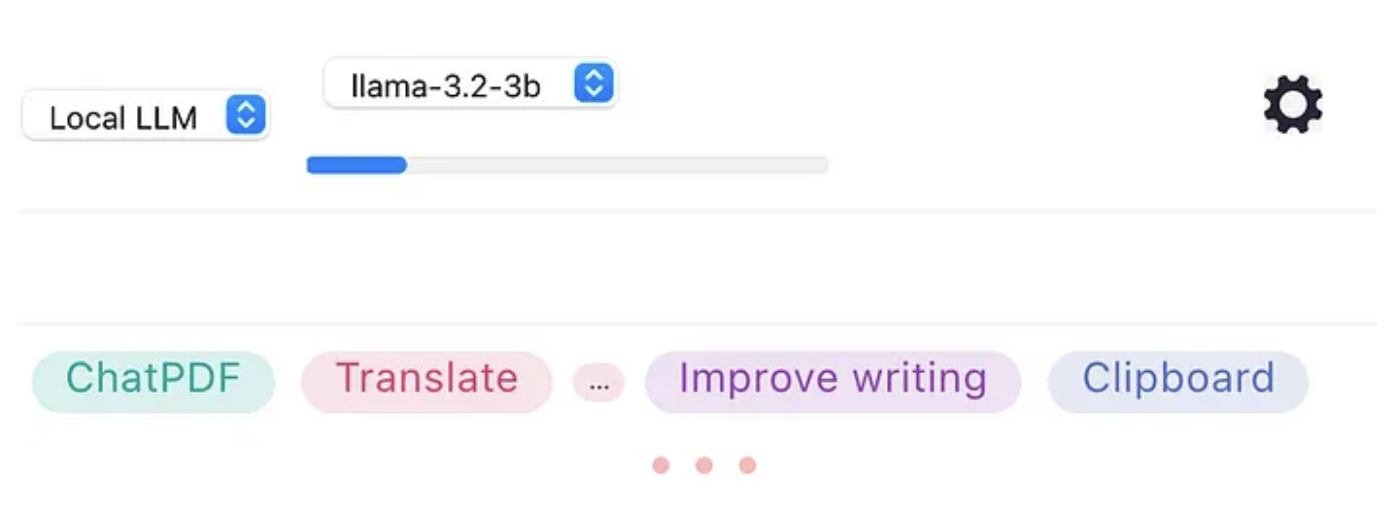

If you are a Mac user, no API key is needed: SOTA open-source models are available!

Llama 3.2, Gemma 3, DeepSeek R1, QwQ-32B, Marco and Mistral can all be chosen with one click in the plugin, without manually installing additional tools or software.

The LLM is downloaded and deployed automatically the first time it is used; you can follow the download status on the ‘progress bar’. Then enjoy it.

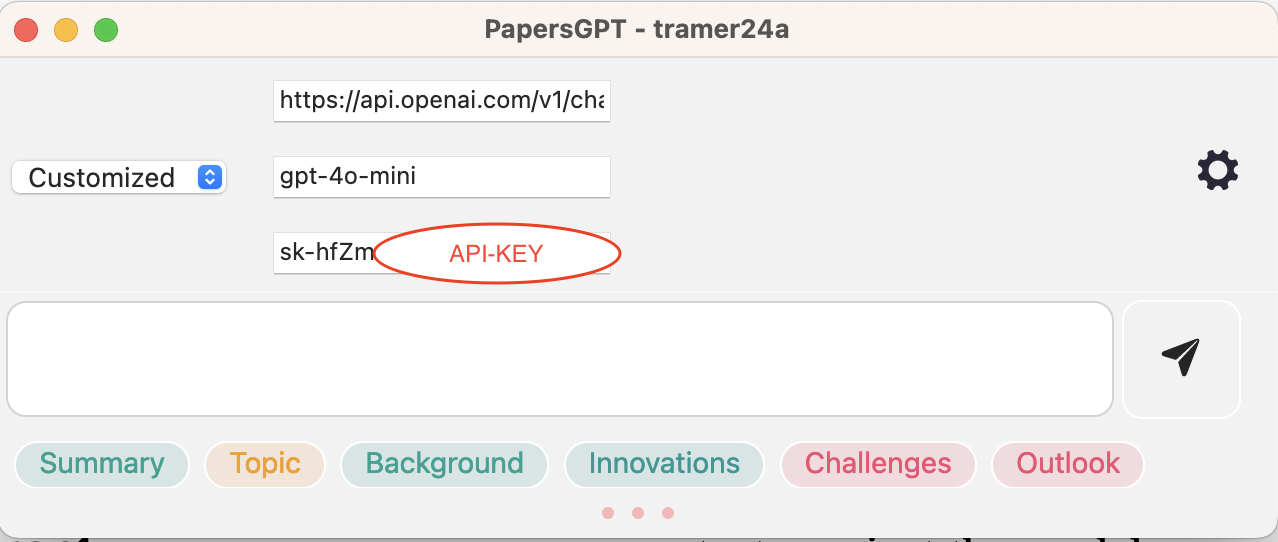

If you want to use an LLM that is not integrated in PapersGPT, you can try the 'Customized' function.

Here are the manual configuration steps for OpenAI, which you can use as a reference.

Step 1. Select the 'Customized' item.

Step 2. Fill in the three lines.

line 1: https://api.openai.com/v1/chat/completions

line 2: model name

line 3: api-key
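The three fields above correspond to the pieces of a standard OpenAI chat completions request: the endpoint URL, the model name in the JSON body, and the key in the `Authorization` header. The sketch below only builds such a request without sending it. The helper name `build_chat_request`, the model `gpt-4o` and the key `sk-your-key` are placeholders chosen for illustration, and this is not necessarily how PapersGPT issues the call internally.

```python
import json
import urllib.request


def build_chat_request(endpoint: str, model: str, api_key: str,
                       question: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completions request.

    Hypothetical helper for illustration; not part of PapersGPT.
    """
    payload = {
        "model": model,  # line 2 of the 'Customized' form
        "messages": [{"role": "user", "content": question}],
    }
    return urllib.request.Request(
        endpoint,  # line 1 of the 'Customized' form
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # line 3 of the 'Customized' form is sent as a bearer token
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


request = build_chat_request(
    "https://api.openai.com/v1/chat/completions",
    "gpt-4o",        # placeholder model name
    "sk-your-key",   # placeholder, replace with your own key
    "Summarize the main points in this paper.",
)
```

Passing `request` to `urllib.request.urlopen` with a valid key would send it. Other providers that expose an OpenAI-compatible endpoint would presumably only need a different URL, model name and key.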

Open the file you want to chat with, start the plugin, select the LLM, and then chat with the PDF.

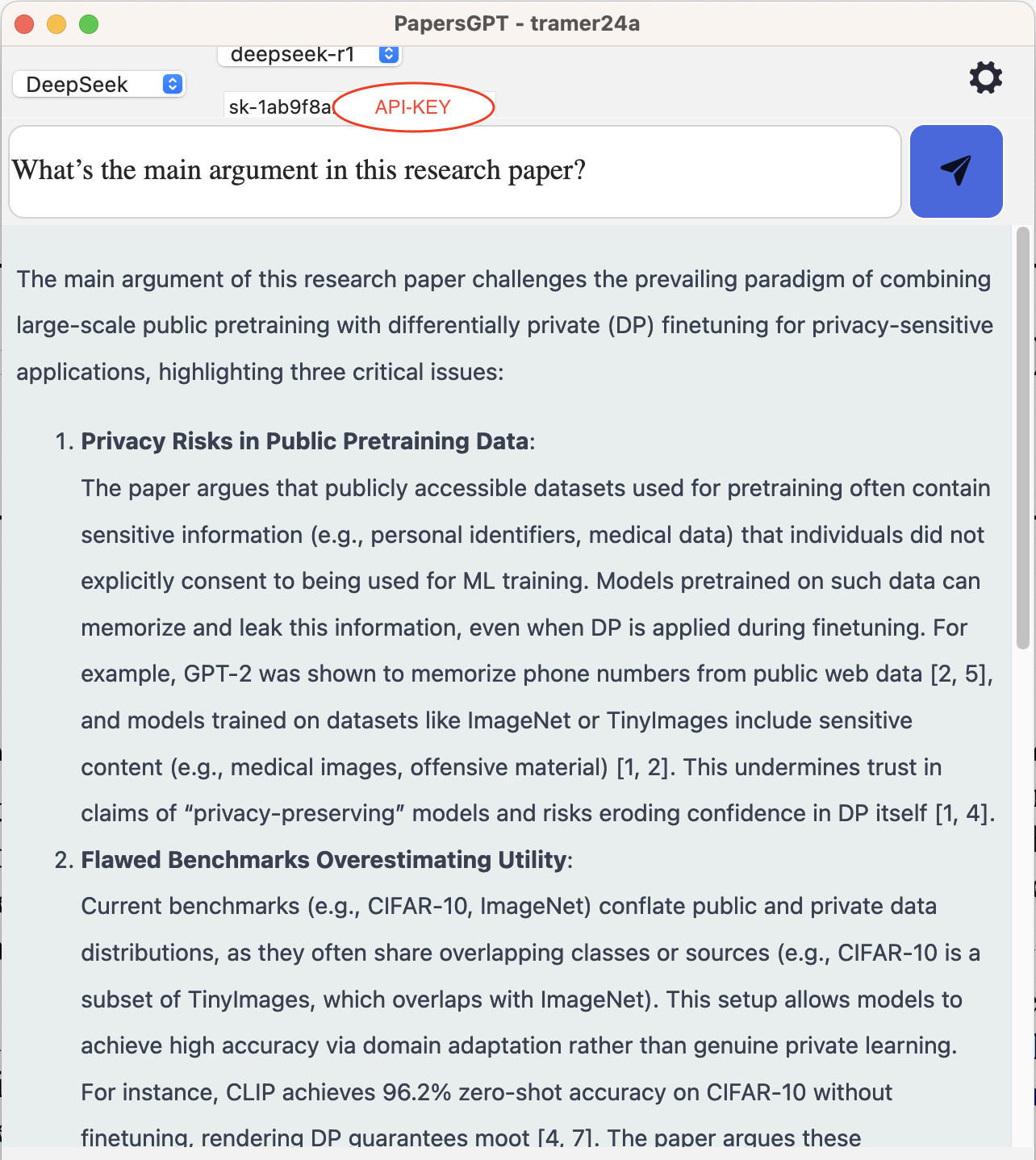

Click the ‘paper aeroplane’ button on the right to chat with it. The responses reference the relevant pieces of the original document.

Here are some examples of what you can ask:

"What’s the main argument in this research paper?"

"Can you explain the steps in this process?"

"Summarize the main points in this essay."

Below is the answer to your question.

You can also use buttons to ask common questions and get a general idea of your paper. PapersGPT provides 6 such buttons; click one to chat with the PDF.

Here is what the different buttons represent.

Summary: Summarize the main points in this paper.

Topic: Define the topic of the paper clearly.

Background: Understand the historical context of the topic discussed in the paper.

Innovations: List the main contributions or innovations of this paper.

Challenges: Identify the challenges and criticisms.

Outlook: Explore future directions and the potential.
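One way to picture the six buttons is as a lookup table from button label to a canned prompt. The sketch below is an assumption about the general shape only: the names `QUICK_PROMPTS` and `prompt_for` are hypothetical, and the prompt strings are simply the descriptions listed above, not necessarily the exact text the plugin sends to the model.

```python
# Hypothetical mapping from button label to the question it asks.
QUICK_PROMPTS = {
    "Summary": "Summarize the main points in this paper.",
    "Topic": "Define the topic of the paper clearly.",
    "Background": "Understand the historical context of the topic discussed in the paper.",
    "Innovations": "List the main contributions or innovations of this paper.",
    "Challenges": "Identify the challenges and criticisms.",
    "Outlook": "Explore future directions and the potential.",
}


def prompt_for(button: str) -> str:
    """Return the canned prompt for a button label, or raise ValueError."""
    try:
        return QUICK_PROMPTS[button]
    except KeyError:
        raise ValueError(f"Unknown button: {button!r}") from None


summary_prompt = prompt_for("Summary")
```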

All SOTA online commercial LLMs are available

Easily access the best LLMs, such as Gemini 2.5 Pro, Gemini 2.5 Flash, DeepSeek R1, DeepSeek V3, o4-mini, o3-mini, o1-mini, GPT-4.1 and Claude 3.7 Sonnet, with your own API key. With PapersGPT, it is easy to select and switch to the LLM you prefer, not only GPT models. OpenRouter is also integrated in PapersGPT; almost all SOTA models are available there, so you can use your OpenRouter API key to access them.

As of this writing, Gemini 2.5 Pro is the No. 1 model on the Chatbot Arena LLM leaderboard. Besides Gemini 2.5 Pro, Gemini 2.5 Flash, Claude 3.7 Sonnet, DeepSeek R1 and DeepSeek V3 are all very good, and all are strongly recommended as alternatives to ChatGPT and o1/o3/o4-mini.

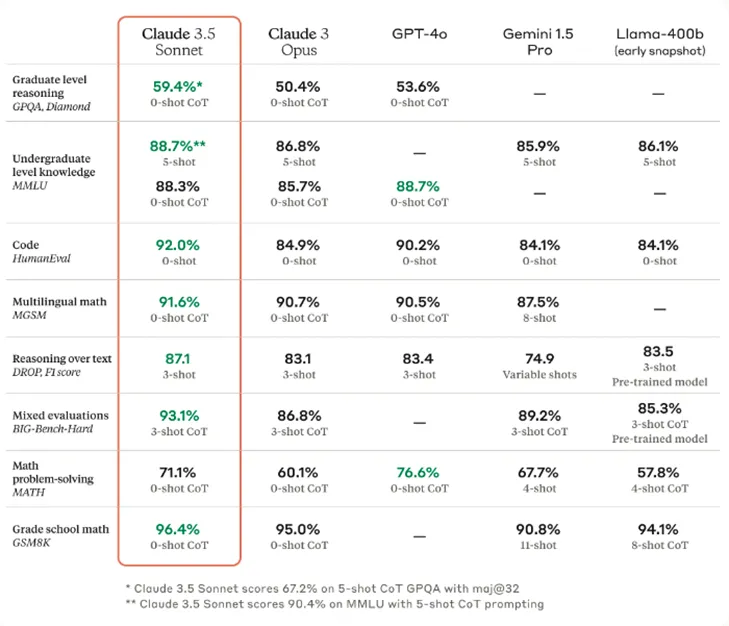

For example, someone may want to use Claude to chat with PDFs online, because Anthropic’s medium-sized model Claude 3.5 Sonnet outperforms gpt-4o (ChatGPT’s latest update, as of this writing) in many ways, particularly those involving reasoning.

From the table provided by Anthropic (see here), we can see that Claude 3.5 Sonnet beat competitors such as gpt-4o, Gemini 1.5 Pro and others in 7 out of 9 overall categories. Claude 3.5 Sonnet is a medium-sized model, between Haiku and Opus, but its performance was impressive, notably in graduate-level reasoning (GPQA), undergraduate-level knowledge (MMLU) and text-based problem-solving, which are very important for chat-with-PDF apps.

100% Privacy and Safety of Your Personal Data for Mac Users

For Mac users, PapersGPT lets you chat privately and safely using a local LLM. The RAG components (embeddings, vector database and reranking) are all built and run locally. There is no data leakage, and it works normally even on a plane with no internet connection.
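To make the roles of the three RAG components concrete, here is a toy, fully offline sketch in pure Python. Everything in it is an illustrative assumption rather than PapersGPT's implementation: `embed` uses bag-of-words counts in place of a neural embedding model, `VectorStore` is an in-memory list in place of a real vector database, and `rerank` scores query-term coverage in place of a trained reranker.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: bag-of-words term counts (stand-in for a real model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


class VectorStore:
    """Toy in-memory vector database: stores (chunk, vector) pairs."""

    def __init__(self) -> None:
        self.items: list[tuple[str, Counter]] = []

    def add(self, chunk: str) -> None:
        self.items.append((chunk, embed(chunk)))

    def search(self, query: str, k: int) -> list[str]:
        """Return the k chunks most similar to the query."""
        q = embed(query)
        ranked = sorted(self.items, key=lambda it: cosine(q, it[1]), reverse=True)
        return [chunk for chunk, _ in ranked[:k]]


def rerank(query: str, chunks: list[str]) -> list[str]:
    """Toy reranker: order chunks by the share of query terms they contain."""
    q_terms = set(embed(query))

    def coverage(chunk: str) -> float:
        return len(q_terms & set(embed(chunk))) / len(q_terms) if q_terms else 0.0

    return sorted(chunks, key=coverage, reverse=True)


store = VectorStore()
for chunk in [
    "We propose a sparse attention mechanism for long documents.",
    "The dataset contains 10,000 annotated abstracts.",
    "Experiments show the sparse attention model is faster.",
]:
    store.add(chunk)

question = "Which attention mechanism do the authors propose?"
# Retrieve candidates from the store, then reorder them before prompting the LLM.
context = rerank(question, store.search(question, k=2))
```

The chunks in `context` would then be placed in the prompt given to the local LLM. Because every step runs in-process, no paper text has to leave the machine, which is the property the paragraph above describes.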

Local LLMs are all free, no need to purchase an online API key

You don’t need to purchase an API key for an online model. PapersGPT provides local LLMs for you: just select a local LLM and use it. Please note that chatting with a local LLM is only available in the macOS version.

Local LLMs will be continuously updated

Currently, the available local LLMs include Gemma 3, Qwen 3, DeepSeek-Distill-Llama, Llama 3.2 and Mistral Nemo. You can choose the model you like. More models and updates are on the way. Please note that chatting with a local LLM is currently only available on macOS.